diff --git a/CMakeLists.txt b/CMakeLists.txt

index 4c50e2a..8b800e0 100644

--- a/CMakeLists.txt

+++ b/CMakeLists.txt

@@ -1,4 +1,4 @@

-cmake_minimum_required (VERSION 3.0)

+cmake_minimum_required (VERSION 3.5)

project(whisper.cpp VERSION 1.4.2)

@@ -35,6 +35,12 @@ endif()

# options

+if (APPLE)

+ set(WHISPER_METAL_DEFAULT ON)

+else()

+ set(WHISPER_METAL_DEFAULT OFF)

+endif()

+

option(BUILD_SHARED_LIBS "whisper: build shared libs" ${BUILD_SHARED_LIBS_DEFAULT})

option(WHISPER_ALL_WARNINGS "whisper: enable all compiler warnings" ON)

@@ -58,6 +64,8 @@ option(WHISPER_OPENVINO "whisper: support for OpenVINO" OFF)

if (APPLE)

option(WHISPER_NO_ACCELERATE "whisper: disable Accelerate framework" OFF)

+ option(WHISPER_METAL "whisper: use Metal" ${WHISPER_METAL_DEFAULT})

+ option(WHISPER_METAL_NDEBUG "whisper: disable Metal debugging" OFF)

option(WHISPER_COREML "whisper: enable Core ML framework" OFF)

option(WHISPER_COREML_ALLOW_FALLBACK "whisper: allow non-CoreML fallback" OFF)

else()

@@ -113,6 +121,34 @@ if (APPLE)

endif()

endif()

+ if (WHISPER_METAL)

+ find_library(FOUNDATION_LIBRARY Foundation REQUIRED)

+ find_library(METAL_FRAMEWORK Metal REQUIRED)

+ find_library(METALKIT_FRAMEWORK MetalKit REQUIRED)

+

+ if (METAL_FRAMEWORK)

+ message(STATUS "Metal framework found")

+

+ set(WHISPER_EXTRA_LIBS ${WHISPER_EXTRA_LIBS}

+ ${FOUNDATION_LIBRARY}

+ ${METAL_FRAMEWORK}

+ ${METALKIT_FRAMEWORK}

+ )

+ set(WHISPER_EXTRA_FLAGS ${WHISPER_EXTRA_FLAGS} -DGGML_USE_METAL)

+

+ if (WHISPER_METAL_NDEBUG)

+ set(WHISPER_EXTRA_FLAGS ${WHISPER_EXTRA_FLAGS} -DGGML_METAL_NDEBUG)

+ endif()

+ else()

+ message(WARNING "Metal framework not found")

+ endif()

+

+ set(GGML_SOURCES_METAL ggml-metal.m ggml-metal.h)

+

+ # copy ggml-metal.metal to bin directory

+ configure_file(ggml-metal.metal bin/ggml-metal.metal COPYONLY)

+ endif()

+

if (WHISPER_COREML)

find_library(FOUNDATION_FRAMEWORK Foundation)

find_library(COREML_FRAMEWORK CoreML)

@@ -177,7 +213,7 @@ if (WHISPER_CUBLAS)

enable_language(CUDA)

- set(GGML_CUDA_SOURCES ggml-cuda.cu ggml-cuda.h)

+ set(GGML_SOURCES_CUDA ggml-cuda.cu ggml-cuda.h)

add_compile_definitions(GGML_USE_CUBLAS)

@@ -228,7 +264,7 @@ if (WHISPER_CLBLAST)

if (CLBlast_FOUND)

message(STATUS "CLBlast found")

- set(GGML_OPENCL_SOURCES ggml-opencl.cpp ggml-opencl.h)

+ set(GGML_SOURCES_OPENCL ggml-opencl.cpp ggml-opencl.h)

add_compile_definitions(GGML_USE_CLBLAST)

@@ -426,8 +462,11 @@ set(TARGET whisper)

add_library(${TARGET}

ggml.h

ggml.c

- ${GGML_CUDA_SOURCES}

- ${GGML_OPENCL_SOURCES}

+ ggml-alloc.h

+ ggml-alloc.c

+ ${GGML_SOURCES_METAL}

+ ${GGML_SOURCES_CUDA}

+ ${GGML_SOURCES_OPENCL}

whisper.h

whisper.cpp

)

@@ -468,9 +507,15 @@ if (BUILD_SHARED_LIBS)

WHISPER_BUILD

GGML_BUILD

)

+

+ if (WHISPER_METAL)

+ # TODO: I think this should make ggml-metal.m "see" the ggml-metal.metal file from the "bin" directory

+ # but for some reason it does not work here like it does in llama.cpp

+ set_target_properties(${TARGET} PROPERTIES RESOURCE "${CMAKE_CURRENT_SOURCE_DIR}/ggml-metal.metal")

+ endif()

endif()

-if (GGML_CUDA_SOURCES)

+if (GGML_SOURCES_CUDA)

message(STATUS "GGML CUDA sources found, configuring CUDA architecture")

set_property(TARGET whisper PROPERTY CUDA_ARCHITECTURES OFF)

set_property(TARGET whisper PROPERTY CUDA_SELECT_NVCC_ARCH_FLAGS "Auto")

@@ -486,10 +531,13 @@ target_compile_definitions(${TARGET} PUBLIC

set_target_properties(${TARGET} PROPERTIES PUBLIC_HEADER "whisper.h")

+include(GNUInstallDirs)

+

install(TARGETS ${TARGET}

- LIBRARY DESTINATION lib

- ARCHIVE DESTINATION lib/static

- RUNTIME DESTINATION bin

+ LIBRARY DESTINATION lib

+ ARCHIVE DESTINATION lib/static

+ RUNTIME DESTINATION bin

+ RESOURCE DESTINATION bin

PUBLIC_HEADER DESTINATION include

)

diff --git a/Makefile b/Makefile

index ecbbcff..2df5111 100644

--- a/Makefile

+++ b/Makefile

@@ -18,7 +18,7 @@ ifndef NVCC_VERSION

endif

endif

-CCV := $(shell $(CC) --version | head -n 1)

+CCV := $(shell $(CC) --version | head -n 1)

CXXV := $(shell $(CXX) --version | head -n 1)

# Mac OS + Arm can report x86_64

@@ -182,6 +182,15 @@ ifdef WHISPER_COREML_ALLOW_FALLBACK

endif

endif

+ifndef WHISPER_NO_METAL

+ ifeq ($(UNAME_S),Darwin)

+ WHISPER_METAL := 1

+

+ CXXFLAGS += -DGGML_USE_METAL

+ LDFLAGS += -framework Foundation -framework Metal -framework MetalKit

+ endif

+endif

+

ifdef WHISPER_OPENBLAS

CFLAGS += -DGGML_USE_OPENBLAS -I/usr/local/include/openblas -I/usr/include/openblas

LDFLAGS += -lopenblas

@@ -288,6 +297,11 @@ $(info )

ggml.o: ggml.c ggml.h ggml-cuda.h

$(CC) $(CFLAGS) -c $< -o $@

+ggml-alloc.o: ggml-alloc.c ggml.h ggml-alloc.h

+ $(CC) $(CFLAGS) -c $< -o $@

+

+WHISPER_OBJ += ggml-alloc.o

+

whisper.o: whisper.cpp whisper.h ggml.h ggml-cuda.h

$(CXX) $(CXXFLAGS) -c $< -o $@

@@ -303,6 +317,13 @@ whisper-encoder-impl.o: coreml/whisper-encoder-impl.m coreml/whisper-encoder-imp

WHISPER_OBJ += whisper.o whisper-encoder.o whisper-encoder-impl.o

endif

+ifdef WHISPER_METAL

+ggml-metal.o: ggml-metal.m ggml-metal.h

+ $(CC) $(CFLAGS) -c $< -o $@

+

+WHISPER_OBJ += ggml-metal.o

+endif

+

libwhisper.a: ggml.o $(WHISPER_OBJ)

$(AR) rcs libwhisper.a ggml.o $(WHISPER_OBJ)

diff --git a/README.md b/README.md

index 5f18060..3707b93 100644

--- a/README.md

+++ b/README.md

@@ -11,14 +11,14 @@ Beta: [v1.4.2](https://github.com/ggerganov/whisper.cpp/releases/tag/v1.4.2) / S

High-performance inference of [OpenAI's Whisper](https://github.com/openai/whisper) automatic speech recognition (ASR) model:

- Plain C/C++ implementation without dependencies

-- Apple silicon first-class citizen - optimized via ARM NEON, Accelerate framework and [Core ML](https://github.com/ggerganov/whisper.cpp#core-ml-support)

+- Apple Silicon first-class citizen - optimized via ARM NEON, Accelerate framework, Metal and [Core ML](https://github.com/ggerganov/whisper.cpp#core-ml-support)

- AVX intrinsics support for x86 architectures

- VSX intrinsics support for POWER architectures

- Mixed F16 / F32 precision

- [4-bit and 5-bit integer quantization support](https://github.com/ggerganov/whisper.cpp#quantization)

- Low memory usage (Flash Attention)

- Zero memory allocations at runtime

-- Runs on the CPU

+- Support for CPU-only inference

- [Partial GPU support for NVIDIA via cuBLAS](https://github.com/ggerganov/whisper.cpp#nvidia-gpu-support-via-cublas)

- [Partial OpenCL GPU support via CLBlast](https://github.com/ggerganov/whisper.cpp#opencl-gpu-support-via-clblast)

- [BLAS CPU support via OpenBLAS](https://github.com/ggerganov/whisper.cpp#blas-cpu-support-via-openblas)

@@ -50,6 +50,10 @@ You can also easily make your own offline voice assistant application: [command]

https://user-images.githubusercontent.com/1991296/204038393-2f846eae-c255-4099-a76d-5735c25c49da.mp4

+On Apply Silicon, the inference runs fully on the GPU via Metal:

+

+https://github.com/ggerganov/whisper.cpp/assets/1991296/c82e8f86-60dc-49f2-b048-d2fdbd6b5225

+

Or you can even run it straight in the browser: [talk.wasm](examples/talk.wasm)

## Implementation details

diff --git a/bindings/ios b/bindings/ios

index de46d9e..22a9eef 160000

--- a/bindings/ios

+++ b/bindings/ios

@@ -1 +1 @@

-Subproject commit de46d9e7817fe851c109d66080239d415812d32a

+Subproject commit 22a9eef021afc67f2154bc9811ed620b26299d1b

diff --git a/coreml/whisper-encoder.mm b/coreml/whisper-encoder.mm

index 6cd90ed..499edae 100644

--- a/coreml/whisper-encoder.mm

+++ b/coreml/whisper-encoder.mm

@@ -22,7 +22,13 @@ struct whisper_coreml_context * whisper_coreml_init(const char * path_model) {

NSURL * url_model = [NSURL fileURLWithPath: path_model_str];

- const void * data = CFBridgingRetain([[whisper_encoder_impl alloc] initWithContentsOfURL:url_model error:nil]);

+ // select which device to run the Core ML model on

+ MLModelConfiguration *config = [[MLModelConfiguration alloc] init];

+ config.computeUnits = MLComputeUnitsCPUAndGPU;

+ //config.computeUnits = MLComputeUnitsCPUAndNeuralEngine;

+ //config.computeUnits = MLComputeUnitsAll;

+

+ const void * data = CFBridgingRetain([[whisper_encoder_impl alloc] initWithContentsOfURL:url_model configuration:config error:nil]);

if (data == NULL) {

return NULL;

diff --git a/examples/bench/bench.cpp b/examples/bench/bench.cpp

index 49daaa0..ac0e6bb 100644

--- a/examples/bench/bench.cpp

+++ b/examples/bench/bench.cpp

@@ -44,13 +44,13 @@ void whisper_print_usage(int /*argc*/, char ** argv, const whisper_params & para

fprintf(stderr, " -t N, --threads N [%-7d] number of threads to use during computation\n", params.n_threads);

fprintf(stderr, " -m FNAME, --model FNAME [%-7s] model path\n", params.model.c_str());

fprintf(stderr, " -w N, --what N [%-7d] what to benchmark:\n", params.what);

- fprintf(stderr, " %-7s 0 - whisper encoder\n", "");

+ fprintf(stderr, " %-7s 0 - whisper\n", "");

fprintf(stderr, " %-7s 1 - memcpy\n", "");

fprintf(stderr, " %-7s 2 - ggml_mul_mat\n", "");

fprintf(stderr, "\n");

}

-int whisper_bench_encoder(const whisper_params & params) {

+int whisper_bench_full(const whisper_params & params) {

// whisper init

struct whisper_context * ctx = whisper_init_from_file(params.model.c_str());

@@ -69,12 +69,49 @@ int whisper_bench_encoder(const whisper_params & params) {

fprintf(stderr, "error: failed to set mel: %d\n", ret);

return 3;

}

-

+ // heat encoder

if (int ret = whisper_encode(ctx, 0, params.n_threads) != 0) {

fprintf(stderr, "error: failed to encode model: %d\n", ret);

return 4;

}

+ whisper_token tokens[512];

+ memset(tokens, 0, sizeof(tokens));

+

+ // prompt heat

+ if (int ret = whisper_decode(ctx, tokens, 256, 0, params.n_threads) != 0) {

+ fprintf(stderr, "error: failed to encode model: %d\n", ret);

+ return 4;

+ }

+

+ // text-generation heat

+ if (int ret = whisper_decode(ctx, tokens, 1, 256, params.n_threads) != 0) {

+ fprintf(stderr, "error: failed to encode model: %d\n", ret);

+ return 4;

+ }

+

+ whisper_reset_timings(ctx);

+

+ // actual run

+ if (int ret = whisper_encode(ctx, 0, params.n_threads) != 0) {

+ fprintf(stderr, "error: failed to encode model: %d\n", ret);

+ return 4;

+ }

+

+ for (int i = 0; i < 16; i++) {

+ if (int ret = whisper_decode(ctx, tokens, 256, 0, params.n_threads) != 0) {

+ fprintf(stderr, "error: failed to encode model: %d\n", ret);

+ return 4;

+ }

+ }

+

+ for (int i = 0; i < 256; i++) {

+ if (int ret = whisper_decode(ctx, tokens, 1, i, params.n_threads) != 0) {

+ fprintf(stderr, "error: failed to encode model: %d\n", ret);

+ return 4;

+ }

+ }

+

whisper_print_timings(ctx);

whisper_free(ctx);

@@ -103,7 +140,7 @@ int main(int argc, char ** argv) {

int ret = -1;

switch (params.what) {

- case 0: ret = whisper_bench_encoder(params); break;

+ case 0: ret = whisper_bench_full(params); break;

case 1: ret = whisper_bench_memcpy(params.n_threads); break;

case 2: ret = whisper_bench_ggml_mul_mat(params.n_threads); break;

default: fprintf(stderr, "error: unknown benchmark: %d\n", params.what); break;

diff --git a/examples/talk-llama/CMakeLists.txt b/examples/talk-llama/CMakeLists.txt

index cbdfb41..af5b547 100644

--- a/examples/talk-llama/CMakeLists.txt

+++ b/examples/talk-llama/CMakeLists.txt

@@ -7,7 +7,7 @@ if (WHISPER_SDL2)

# TODO: this is temporary

# need to export ggml symbols for MSVC, but too lazy ..

- add_executable(${TARGET} talk-llama.cpp llama.cpp ../common.cpp ../common-sdl.cpp ../../ggml.c ../../whisper.cpp)

+ add_executable(${TARGET} talk-llama.cpp llama.cpp ../common.cpp ../common-sdl.cpp ../../ggml.c ../../ggml-alloc.c ../../whisper.cpp)

target_include_directories(${TARGET} PRIVATE ${SDL2_INCLUDE_DIRS} ../../)

target_link_libraries(${TARGET} PRIVATE ${SDL2_LIBRARIES} ${CMAKE_THREAD_LIBS_INIT})

diff --git a/examples/whisper.android/app/src/main/jni/whisper/CMakeLists.txt b/examples/whisper.android/app/src/main/jni/whisper/CMakeLists.txt

index 55a4725..eac718a 100644

--- a/examples/whisper.android/app/src/main/jni/whisper/CMakeLists.txt

+++ b/examples/whisper.android/app/src/main/jni/whisper/CMakeLists.txt

@@ -8,6 +8,7 @@ set(WHISPER_LIB_DIR ${CMAKE_SOURCE_DIR}/../../../../../../../)

set(

SOURCE_FILES

${WHISPER_LIB_DIR}/ggml.c

+ ${WHISPER_LIB_DIR}/ggml-alloc.c

${WHISPER_LIB_DIR}/whisper.cpp

${CMAKE_SOURCE_DIR}/jni.c

)

@@ -20,7 +21,7 @@ function(build_library target_name)

SHARED

${SOURCE_FILES}

)

-

+

target_link_libraries(${target_name} ${LOG_LIB} android)

if (${target_name} STREQUAL "whisper_v8fp16_va")

diff --git a/examples/whisper.objc/README.md b/examples/whisper.objc/README.md

index 6833ebb..bb55653 100644

--- a/examples/whisper.objc/README.md

+++ b/examples/whisper.objc/README.md

@@ -28,6 +28,8 @@ This can significantly improve the performance of the transcription:

+## Core ML

+

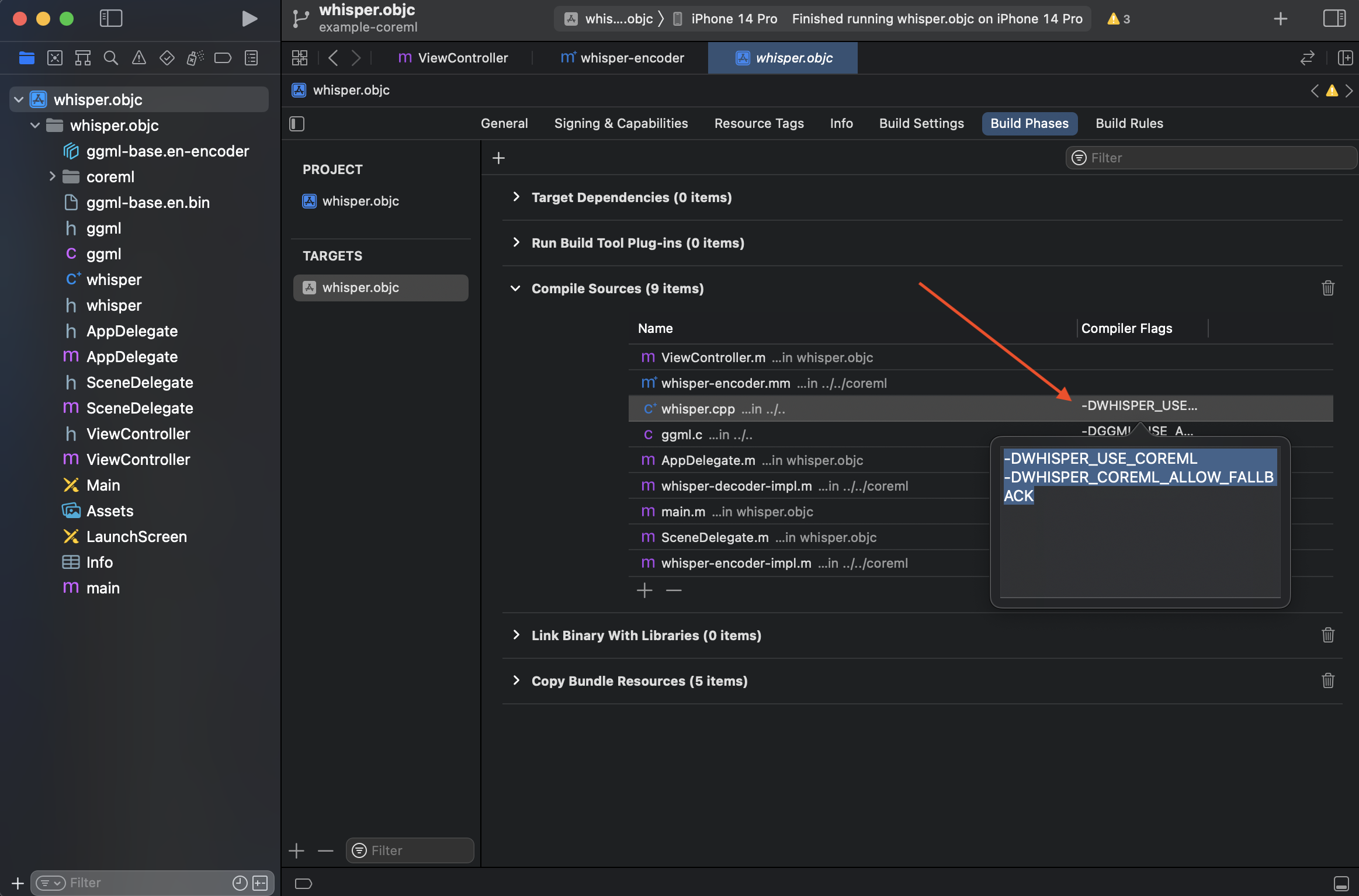

If you want to enable Core ML support, you can add the `-DWHISPER_USE_COREML -DWHISPER_COREML_ALLOW_FALLBACK` compiler flag for `whisper.cpp` in Build Phases:

+## Core ML

+

If you want to enable Core ML support, you can add the `-DWHISPER_USE_COREML -DWHISPER_COREML_ALLOW_FALLBACK` compiler flag for `whisper.cpp` in Build Phases:

@@ -35,3 +37,13 @@ If you want to enable Core ML support, you can add the `-DWHISPER_USE_COREML -DW

Then follow the [`Core ML support` section of readme](../../README.md#core-ml-support) for convert the model.

In this project, it also added `-O3 -DNDEBUG` to `Other C Flags`, but adding flags to app proj is not ideal in real world (applies to all C/C++ files), consider splitting xcodeproj in workspace in your own project.

+

+## Metal

+

+You can also enable Metal to make the inference run on the GPU of your device. This might or might not be more efficient

+compared to Core ML depending on the model and device that you use.

+

+To enable Metal, just add `-DGGML_USE_METAL` instead off the `-DWHISPER_USE_COREML` flag and you are ready.

+This will make both the Encoder and the Decoder run on the GPU.

+

+If you want to run the Encoder with Core ML and the Decoder with Metal then simply add both `-DWHISPER_USE_COREML -DGGML_USE_METAL` flags. That's all!

diff --git a/examples/whisper.objc/whisper.objc.xcodeproj/project.pbxproj b/examples/whisper.objc/whisper.objc.xcodeproj/project.pbxproj

index 49bd74e..f34b9c5 100644

--- a/examples/whisper.objc/whisper.objc.xcodeproj/project.pbxproj

+++ b/examples/whisper.objc/whisper.objc.xcodeproj/project.pbxproj

@@ -7,6 +7,9 @@

objects = {

/* Begin PBXBuildFile section */

+ 1844471A2AB211A2007D6BFE /* ggml-alloc.c in Sources */ = {isa = PBXBuildFile; fileRef = 184447182AB211A2007D6BFE /* ggml-alloc.c */; };

+ 1844471C2AB21655007D6BFE /* ggml-metal.m in Sources */ = {isa = PBXBuildFile; fileRef = 1844471B2AB21655007D6BFE /* ggml-metal.m */; settings = {COMPILER_FLAGS = "-framework Foundation -framework Metal -framework MetalKit -fno-objc-arc"; }; };

+ 184447212AB21B43007D6BFE /* ggml-metal.metal in CopyFiles */ = {isa = PBXBuildFile; fileRef = 1844471D2AB2195F007D6BFE /* ggml-metal.metal */; };

18627C7B29052BDF00BD2A04 /* AppDelegate.m in Sources */ = {isa = PBXBuildFile; fileRef = 18627C7A29052BDF00BD2A04 /* AppDelegate.m */; };

18627C7E29052BDF00BD2A04 /* SceneDelegate.m in Sources */ = {isa = PBXBuildFile; fileRef = 18627C7D29052BDF00BD2A04 /* SceneDelegate.m */; };

18627C8129052BDF00BD2A04 /* ViewController.m in Sources */ = {isa = PBXBuildFile; fileRef = 18627C8029052BDF00BD2A04 /* ViewController.m */; };

@@ -14,7 +17,7 @@

18627C8629052BE000BD2A04 /* Assets.xcassets in Resources */ = {isa = PBXBuildFile; fileRef = 18627C8529052BE000BD2A04 /* Assets.xcassets */; };

18627C8929052BE000BD2A04 /* LaunchScreen.storyboard in Resources */ = {isa = PBXBuildFile; fileRef = 18627C8729052BE000BD2A04 /* LaunchScreen.storyboard */; };

18627C8C29052BE000BD2A04 /* main.m in Sources */ = {isa = PBXBuildFile; fileRef = 18627C8B29052BE000BD2A04 /* main.m */; };

- 18627C9429052C4900BD2A04 /* whisper.cpp in Sources */ = {isa = PBXBuildFile; fileRef = 18627C9329052C4900BD2A04 /* whisper.cpp */; settings = {COMPILER_FLAGS = "-DWHISPER_USE_COREML -DWHISPER_COREML_ALLOW_FALLBACK"; }; };

+ 18627C9429052C4900BD2A04 /* whisper.cpp in Sources */ = {isa = PBXBuildFile; fileRef = 18627C9329052C4900BD2A04 /* whisper.cpp */; settings = {COMPILER_FLAGS = "-DWHISPER_USE_COREML"; }; };

18627C9629052C5800BD2A04 /* ggml.c in Sources */ = {isa = PBXBuildFile; fileRef = 18627C9529052C5800BD2A04 /* ggml.c */; settings = {COMPILER_FLAGS = "-DGGML_USE_ACCELERATE"; }; };

18627C9B29052CFF00BD2A04 /* ggml-base.en.bin in Resources */ = {isa = PBXBuildFile; fileRef = 18627C9A29052CFF00BD2A04 /* ggml-base.en.bin */; };

7FE3424B2A0C3FA20015A058 /* whisper-encoder-impl.m in Sources */ = {isa = PBXBuildFile; fileRef = 7FE342452A0C3FA20015A058 /* whisper-encoder-impl.m */; };

@@ -23,7 +26,24 @@

7FE3424F2A0C418A0015A058 /* ggml-base.en-encoder.mlmodelc in Resources */ = {isa = PBXBuildFile; fileRef = 7FE3424E2A0C418A0015A058 /* ggml-base.en-encoder.mlmodelc */; };

/* End PBXBuildFile section */

+/* Begin PBXCopyFilesBuildPhase section */

+ 184447202AB21B25007D6BFE /* CopyFiles */ = {

+ isa = PBXCopyFilesBuildPhase;

+ buildActionMask = 2147483647;

+ dstPath = "";

+ dstSubfolderSpec = 7;

+ files = (

+ 184447212AB21B43007D6BFE /* ggml-metal.metal in CopyFiles */,

+ );

+ runOnlyForDeploymentPostprocessing = 0;

+ };

+/* End PBXCopyFilesBuildPhase section */

+

/* Begin PBXFileReference section */

+ 184447182AB211A2007D6BFE /* ggml-alloc.c */ = {isa = PBXFileReference; fileEncoding = 4; lastKnownFileType = sourcecode.c.c; name = "ggml-alloc.c"; path = "../../../ggml-alloc.c"; sourceTree = ""; };

+ 184447192AB211A2007D6BFE /* ggml-alloc.h */ = {isa = PBXFileReference; fileEncoding = 4; lastKnownFileType = sourcecode.c.h; name = "ggml-alloc.h"; path = "../../../ggml-alloc.h"; sourceTree = ""; };

+ 1844471B2AB21655007D6BFE /* ggml-metal.m */ = {isa = PBXFileReference; fileEncoding = 4; lastKnownFileType = sourcecode.c.objc; name = "ggml-metal.m"; path = "../../../ggml-metal.m"; sourceTree = ""; };

+ 1844471D2AB2195F007D6BFE /* ggml-metal.metal */ = {isa = PBXFileReference; fileEncoding = 4; lastKnownFileType = sourcecode.metal; name = "ggml-metal.metal"; path = "../../../ggml-metal.metal"; sourceTree = ""; };

18627C7629052BDF00BD2A04 /* whisper.objc.app */ = {isa = PBXFileReference; explicitFileType = wrapper.application; includeInIndex = 0; path = whisper.objc.app; sourceTree = BUILT_PRODUCTS_DIR; };

18627C7929052BDF00BD2A04 /* AppDelegate.h */ = {isa = PBXFileReference; lastKnownFileType = sourcecode.c.h; path = AppDelegate.h; sourceTree = ""; };

18627C7A29052BDF00BD2A04 /* AppDelegate.m */ = {isa = PBXFileReference; lastKnownFileType = sourcecode.c.objc; path = AppDelegate.m; sourceTree = ""; };

@@ -80,6 +100,10 @@

18627C7829052BDF00BD2A04 /* whisper.objc */ = {

isa = PBXGroup;

children = (

+ 1844471D2AB2195F007D6BFE /* ggml-metal.metal */,

+ 1844471B2AB21655007D6BFE /* ggml-metal.m */,

+ 184447182AB211A2007D6BFE /* ggml-alloc.c */,

+ 184447192AB211A2007D6BFE /* ggml-alloc.h */,

7FE3424E2A0C418A0015A058 /* ggml-base.en-encoder.mlmodelc */,

7FE342442A0C3FA20015A058 /* coreml */,

18627C9A29052CFF00BD2A04 /* ggml-base.en.bin */,

@@ -126,6 +150,7 @@

18627C7229052BDF00BD2A04 /* Sources */,

18627C7329052BDF00BD2A04 /* Frameworks */,

18627C7429052BDF00BD2A04 /* Resources */,

+ 184447202AB21B25007D6BFE /* CopyFiles */,

);

buildRules = (

);

@@ -194,8 +219,10 @@

18627C9629052C5800BD2A04 /* ggml.c in Sources */,

18627C7B29052BDF00BD2A04 /* AppDelegate.m in Sources */,

7FE3424D2A0C3FA20015A058 /* whisper-decoder-impl.m in Sources */,

+ 1844471A2AB211A2007D6BFE /* ggml-alloc.c in Sources */,

18627C8C29052BE000BD2A04 /* main.m in Sources */,

18627C7E29052BDF00BD2A04 /* SceneDelegate.m in Sources */,

+ 1844471C2AB21655007D6BFE /* ggml-metal.m in Sources */,

7FE3424B2A0C3FA20015A058 /* whisper-encoder-impl.m in Sources */,

);

runOnlyForDeploymentPostprocessing = 0;

diff --git a/examples/whisper.swiftui/whisper.swiftui.xcodeproj/project.pbxproj b/examples/whisper.swiftui/whisper.swiftui.xcodeproj/project.pbxproj

index ab9f688..d2d0b05 100644

--- a/examples/whisper.swiftui/whisper.swiftui.xcodeproj/project.pbxproj

+++ b/examples/whisper.swiftui/whisper.swiftui.xcodeproj/project.pbxproj

@@ -20,6 +20,7 @@

0AAC5DCC29539EB1003032C3 /* ggml.c in Sources */ = {isa = PBXBuildFile; fileRef = 0AAC5DC929539EB0003032C3 /* ggml.c */; settings = {COMPILER_FLAGS = "-DGGML_USE_ACCELERATE -Wno-shorten-64-to-32"; }; };

0AAC5DCE2953A05C003032C3 /* WhisperState.swift in Sources */ = {isa = PBXBuildFile; fileRef = 0AAC5DCD2953A05C003032C3 /* WhisperState.swift */; };

0AAC5DD12953A394003032C3 /* LibWhisper.swift in Sources */ = {isa = PBXBuildFile; fileRef = 0AAC5DD02953A394003032C3 /* LibWhisper.swift */; };

+ 18AED4812AB21F2B009D854F /* ggml-alloc.c in Sources */ = {isa = PBXBuildFile; fileRef = 18AED47F2AB21F2B009D854F /* ggml-alloc.c */; };

/* End PBXBuildFile section */

/* Begin PBXFileReference section */

@@ -41,6 +42,8 @@

0AAC5DCA29539EB0003032C3 /* ggml.h */ = {isa = PBXFileReference; fileEncoding = 4; lastKnownFileType = sourcecode.c.h; path = ggml.h; sourceTree = ""; };

0AAC5DCD2953A05C003032C3 /* WhisperState.swift */ = {isa = PBXFileReference; lastKnownFileType = sourcecode.swift; path = WhisperState.swift; sourceTree = ""; };

0AAC5DD02953A394003032C3 /* LibWhisper.swift */ = {isa = PBXFileReference; lastKnownFileType = sourcecode.swift; path = LibWhisper.swift; sourceTree = ""; };

+ 18AED47F2AB21F2B009D854F /* ggml-alloc.c */ = {isa = PBXFileReference; fileEncoding = 4; lastKnownFileType = sourcecode.c.c; path = "ggml-alloc.c"; sourceTree = ""; };

+ 18AED4802AB21F2B009D854F /* ggml-alloc.h */ = {isa = PBXFileReference; fileEncoding = 4; lastKnownFileType = sourcecode.c.h; path = "ggml-alloc.h"; sourceTree = ""; };

/* End PBXFileReference section */

/* Begin PBXFrameworksBuildPhase section */

@@ -124,6 +127,8 @@

0AAC5DC529539E89003032C3 /* whisper.cpp */ = {

isa = PBXGroup;

children = (

+ 18AED47F2AB21F2B009D854F /* ggml-alloc.c */,

+ 18AED4802AB21F2B009D854F /* ggml-alloc.h */,

0AAC5DC929539EB0003032C3 /* ggml.c */,

0AAC5DCA29539EB0003032C3 /* ggml.h */,

0AAC5DC729539EB0003032C3 /* whisper.cpp */,

@@ -242,6 +247,7 @@

0AA7514C2953B569001EE061 /* RiffWaveUtils.swift in Sources */,

0AAC5DCB29539EB1003032C3 /* whisper.cpp in Sources */,

0AA7514E2953D958001EE061 /* Recorder.swift in Sources */,

+ 18AED4812AB21F2B009D854F /* ggml-alloc.c in Sources */,

);

runOnlyForDeploymentPostprocessing = 0;

};

@@ -369,7 +375,7 @@

CODE_SIGN_STYLE = Automatic;

CURRENT_PROJECT_VERSION = 1;

DEVELOPMENT_ASSET_PATHS = "\"whisper.swiftui.demo/Supporting files/Preview Content\"";

- DEVELOPMENT_TEAM = 3TZ9BM962G;

+ DEVELOPMENT_TEAM = P8JZH34X63;

ENABLE_HARDENED_RUNTIME = YES;

ENABLE_PREVIEWS = YES;

GENERATE_INFOPLIST_FILE = YES;

@@ -410,7 +416,7 @@

CODE_SIGN_STYLE = Automatic;

CURRENT_PROJECT_VERSION = 1;

DEVELOPMENT_ASSET_PATHS = "\"whisper.swiftui.demo/Supporting files/Preview Content\"";

- DEVELOPMENT_TEAM = 3TZ9BM962G;

+ DEVELOPMENT_TEAM = P8JZH34X63;

ENABLE_HARDENED_RUNTIME = YES;

ENABLE_PREVIEWS = YES;

GENERATE_INFOPLIST_FILE = YES;

diff --git a/extra/bench-all.sh b/extra/bench-all.sh

index 43f989d..352a223 100755

--- a/extra/bench-all.sh

+++ b/extra/bench-all.sh

@@ -44,27 +44,26 @@ if [ "$encoder_only" -eq 0 ]; then

printf "\n"

fi

-printf "| CPU | OS | Config | Model | Th | Load | Enc. | Commit |\n"

-printf "| --- | -- | ------ | ----- | -- | ---- | ---- | ------ |\n"

+printf "| %6s | %6s | %12s | %9s | %3s | %7s | %7s | %7s | %7s |\n" "CPU" "OS" "Config" "Model" "Th" "Enc." "Dec." "PP" "Commit"

+printf "| %6s | %6s | %12s | %9s | %3s | %7s | %7s | %7s | %7s |\n" "---" "---" "---" "---" "---" "---" "---" "---" "---"

for model in "${models[@]}"; do

- # run once to heat-up the cache

- ./bench -m ./models/ggml-$model.bin -t $n_threads 2>/dev/null 1>/dev/null

-

# actual run

# store stderr output in a variable in order to parse it later

output=$(./bench -m ./models/ggml-$model.bin -t $n_threads 2>&1)

ret=$?

# parse the output:

- load_time=$(echo "$output" | grep "load time" | awk '{print $5}')

- encode_time=$(echo "$output" | grep "encode time" | awk '{print $5}')

+ encode_time=$(echo "$output" | grep "encode time" | awk '{print $11}')

+ decode_time=$(echo "$output" | grep "decode time" | awk '{print $11}')

+ prompt_time=$(echo "$output" | grep "prompt time" | awk '{print $11}')

system_info=$(echo "$output" | grep "system_info")

n_threads=$(echo "$output" | grep "system_info" | awk '{print $4}')

# floor to milliseconds

- load_time=${load_time%.*}

- encode_time=${encode_time%.*}

+ #encode_time=${encode_time%.*}

+ #decode_time=${decode_time%.*}

+ #prompt_time=${prompt_time%.*}

config=""

@@ -87,6 +86,6 @@ for model in "${models[@]}"; do

commit=$(git rev-parse --short HEAD)

if [ $ret -eq 0 ]; then

- printf "| | | $config | $model | $n_threads | $load_time | $encode_time | $commit |\n"

+ printf "| | | %12s | %9s | %3s | %7s | %7s | %7s | %7s |\n" "$config" "$model" "$n_threads" "$encode_time" "$decode_time" "$prompt_time" "$commit"

fi

done

diff --git a/extra/sync-ggml.sh b/extra/sync-ggml.sh

index 3bd99e3..0070e9e 100755

--- a/extra/sync-ggml.sh

+++ b/extra/sync-ggml.sh

@@ -1,18 +1,20 @@

#!/bin/bash

-cp -rpv ../ggml/src/ggml.c ./ggml.c

-cp -rpv ../ggml/src/ggml-cuda.h ./ggml-cuda.h

-cp -rpv ../ggml/src/ggml-cuda.cu ./ggml-cuda.cu

-cp -rpv ../ggml/src/ggml-opencl.h ./ggml-opencl.h

-cp -rpv ../ggml/src/ggml-opencl.cpp ./ggml-opencl.cpp

-cp -rpv ../ggml/src/ggml-metal.h ./ggml-metal.h

-cp -rpv ../ggml/src/ggml-metal.m ./ggml-metal.m

-cp -rpv ../ggml/src/ggml-metal.metal ./ggml-metal.metal

-cp -rpv ../ggml/include/ggml/ggml.h ./ggml.h

-cp -rpv ../ggml/examples/common.h ./examples/common.h

-cp -rpv ../ggml/examples/common.cpp ./examples/common.cpp

-cp -rpv ../ggml/examples/common-ggml.h ./examples/common-ggml.h

-cp -rpv ../ggml/examples/common-ggml.cpp ./examples/common-ggml.cpp

+cp -rpv ../ggml/src/ggml.c ./ggml.c

+cp -rpv ../ggml/src/ggml-alloc.c ./ggml-alloc.c

+cp -rpv ../ggml/src/ggml-cuda.h ./ggml-cuda.h

+cp -rpv ../ggml/src/ggml-cuda.cu ./ggml-cuda.cu

+cp -rpv ../ggml/src/ggml-opencl.h ./ggml-opencl.h

+cp -rpv ../ggml/src/ggml-opencl.cpp ./ggml-opencl.cpp

+cp -rpv ../ggml/src/ggml-metal.h ./ggml-metal.h

+cp -rpv ../ggml/src/ggml-metal.m ./ggml-metal.m

+cp -rpv ../ggml/src/ggml-metal.metal ./ggml-metal.metal

+cp -rpv ../ggml/include/ggml/ggml.h ./ggml.h

+cp -rpv ../ggml/include/ggml/ggml-alloc.h ./ggml-alloc.h

+cp -rpv ../ggml/examples/common.h ./examples/common.h

+cp -rpv ../ggml/examples/common.cpp ./examples/common.cpp

+cp -rpv ../ggml/examples/common-ggml.h ./examples/common-ggml.h

+cp -rpv ../ggml/examples/common-ggml.cpp ./examples/common-ggml.cpp

cp -rpv ../ggml/examples/whisper/whisper.h ./whisper.h

cp -rpv ../ggml/examples/whisper/whisper.cpp ./whisper.cpp

diff --git a/ggml-alloc.c b/ggml-alloc.c

index 856a4cd..304964b 100644

--- a/ggml-alloc.c

+++ b/ggml-alloc.c

@@ -6,6 +6,26 @@

#include

#include

+#ifdef __has_include

+ #if __has_include()

+ #include

+ #if defined(_POSIX_MAPPED_FILES)

+ #include

+ #include

+ #endif

+ #endif

+#endif

+

+#if defined(_WIN32)

+ #define WIN32_LEAN_AND_MEAN

+ #ifndef NOMINMAX

+ #define NOMINMAX

+ #endif

+ #include

+ #include

+#endif

+

+

#define UNUSED(x) (void)(x)

#define MAX(a, b) ((a) > (b) ? (a) : (b))

#define GGML_MAX_CONCUR (2*GGML_MAX_NODES)

@@ -99,15 +119,28 @@ static void remove_allocated_tensor(struct ggml_allocr * alloc, struct ggml_tens

}

#endif

-

-static size_t ggml_allocator_get_alloc_size(struct ggml_allocr * alloc, struct ggml_tensor * tensor) {

+static size_t ggml_allocr_get_alloc_size(struct ggml_allocr * alloc, struct ggml_tensor * tensor) {

return ggml_nbytes(tensor);

UNUSED(alloc);

}

+// check if a tensor is allocated by this buffer

+static bool ggml_allocr_is_own(struct ggml_allocr * alloc, const struct ggml_tensor * tensor) {

+ void * ptr = tensor->data;

+ return ptr >= alloc->data && (char *)ptr < (char *)alloc->data + alloc->max_size;

+}

+

+static bool ggml_is_view(struct ggml_tensor * t) {

+ return t->view_src != NULL;

+}

+

void ggml_allocr_alloc(struct ggml_allocr * alloc, struct ggml_tensor * tensor) {

- size_t size = ggml_allocator_get_alloc_size(alloc, tensor);

+#ifdef GGML_ALLOCATOR_DEBUG

+ GGML_ASSERT(!ggml_is_view(tensor)); // views generally get data pointer from one of their sources

+ GGML_ASSERT(tensor->data == NULL); // avoid allocating tensor which already has memory allocated

+#endif

+ size_t size = ggml_allocr_get_alloc_size(alloc, tensor);

size = aligned_offset(NULL, size, alloc->alignment);

AT_PRINTF("%s: allocating %s (%zu bytes) - ", __func__, tensor->name, size);

@@ -131,14 +164,14 @@ void ggml_allocr_alloc(struct ggml_allocr * alloc, struct ggml_tensor * tensor)

if (best_fit_block == -1) {

// the last block is our last resort

struct free_block * block = &alloc->free_blocks[alloc->n_free_blocks - 1];

+ max_avail = MAX(max_avail, block->size);

if (block->size >= size) {

best_fit_block = alloc->n_free_blocks - 1;

- max_avail = MAX(max_avail, block->size);

} else {

fprintf(stderr, "%s: not enough space in the buffer (needed %zu, largest block available %zu)\n",

__func__, size, max_avail);

GGML_ASSERT(!"not enough space in the buffer");

- return;

+ return;

}

}

struct free_block * block = &alloc->free_blocks[best_fit_block];

@@ -173,17 +206,17 @@ void ggml_allocr_alloc(struct ggml_allocr * alloc, struct ggml_tensor * tensor)

}

// this is a very naive implementation, but for our case the number of free blocks should be very small

-static void ggml_allocator_free_tensor(struct ggml_allocr * alloc, struct ggml_tensor * tensor) {

+static void ggml_allocr_free_tensor(struct ggml_allocr * alloc, struct ggml_tensor * tensor) {

void * ptr = tensor->data;

- if (ptr < alloc->data || (char*)ptr >= (char*)alloc->data + alloc->max_size) {

+ if (ggml_allocr_is_own(alloc, tensor) == false) {

// the tensor was not allocated in this buffer

// this can happen because the graph allocator will try to free weights and other tensors from different buffers

// the easiest way to deal with this is just to ignore it

return;

}

- size_t size = ggml_allocator_get_alloc_size(alloc, tensor);

+ size_t size = ggml_allocr_get_alloc_size(alloc, tensor);

size = aligned_offset(NULL, size, alloc->alignment);

AT_PRINTF("%s: freeing %s (%zu bytes) - n_free_blocks = %d\n", __func__, tensor->name, size, alloc->n_free_blocks);

@@ -277,17 +310,68 @@ struct ggml_allocr * ggml_allocr_new(void * data, size_t size, size_t alignment)

return alloc;

}

-// address and size of the buffer when measuring

-// it needs to be large enough to fit all the tensors, but it cannot overlap with other existing buffers

-static void * const MEASURE_BASE_ADDR = (void *) 0x1000;

-static const size_t MEASURE_MAX_SIZE = 1ULL<<40; // 1 TB

+// OS specific functions to allocate and free uncommitted virtual memory

+static void * alloc_vmem(size_t size) {

+#if defined(_WIN32)

+ return VirtualAlloc(NULL, size, MEM_RESERVE, PAGE_NOACCESS);

+#elif defined(_POSIX_MAPPED_FILES)

+ void * ptr = mmap(NULL, size, PROT_NONE, MAP_PRIVATE | MAP_ANON, -1, 0);

+ if (ptr == MAP_FAILED) {

+ return NULL;

+ }

+ return ptr;

+#else

+ // use a fixed address for other platforms

+ uintptr_t base_addr = (uintptr_t)-size - 0x100;

+ return (void *)base_addr;

+#endif

+}

+

+static void free_vmem(void * base_addr, size_t size) {

+#if defined(_WIN32)

+ VirtualFree(base_addr, 0, MEM_RELEASE);

+ UNUSED(size);

+#elif defined(_POSIX_MAPPED_FILES)

+ munmap(base_addr, size);

+#else

+ // nothing to do

+ UNUSED(base_addr);

+ UNUSED(size);

+#endif

+}

+

+// allocate uncommitted virtual memory to measure the size of the graph

+static void alloc_measure_vmem(void ** base_addr, size_t * size) {

+ // 128GB for 64-bit, 1GB for 32-bit

+ *size = sizeof(void *) == 4 ? 1ULL<<30 : 1ULL<<37;

+ do {

+ *base_addr = alloc_vmem(*size);

+ if (*base_addr != NULL) {

+ AT_PRINTF("allocated %.2f GB of virtual memory for measure buffer at %p\n", *size / 1024.0 / 1024.0 / 1024.0, *base_addr);

+ return;

+ }

+ // try again with half the size

+ *size /= 2;

+ } while (*size > 0);

+

+ GGML_ASSERT(!"failed to allocate virtual memory for measure buffer");

+}

+

+static void free_measure_vmem(void * base_addr, size_t size) {

+ free_vmem(base_addr, size);

+}

struct ggml_allocr * ggml_allocr_new_measure(size_t alignment) {

struct ggml_allocr * alloc = (struct ggml_allocr *)malloc(sizeof(struct ggml_allocr) /* + n_free_blocks * sizeof(struct free_block) */);

+ void * base_addr;

+ size_t size;

+

+ alloc_measure_vmem(&base_addr, &size);

+

*alloc = (struct ggml_allocr){

- /*.data = */ MEASURE_BASE_ADDR,

- /*.size = */ MEASURE_MAX_SIZE,

+ /*.data = */ base_addr,

+ /*.size = */ size,

/*.alignment = */ alignment,

/*.n_free_blocks = */ 0,

/*.free_blocks = */ {{0}},

@@ -307,6 +391,9 @@ struct ggml_allocr * ggml_allocr_new_measure(size_t alignment) {

}

void ggml_allocr_free(struct ggml_allocr * alloc) {

+ if (alloc->measure) {

+ free_measure_vmem(alloc->data, alloc->size);

+ }

free(alloc);

}

@@ -316,11 +403,6 @@ bool ggml_allocr_is_measure(struct ggml_allocr * alloc) {

//////////// compute graph allocator

-static bool ggml_is_view(struct ggml_tensor * t) {

- return t->op == GGML_OP_RESHAPE || t->op == GGML_OP_VIEW || t->op == GGML_OP_TRANSPOSE ||

- t->op == GGML_OP_PERMUTE || t->op == GGML_OP_CPY;

-}

-

static bool ggml_are_same_layout(const struct ggml_tensor * a, const struct ggml_tensor * b) {

if (a->type != b->type) {

return false;

@@ -336,28 +418,6 @@ static bool ggml_are_same_layout(const struct ggml_tensor * a, const struct ggml

return true;

}

-static struct ggml_tensor * get_view_parent(struct ggml_tensor * t) {

- switch (t->op) {

- case GGML_OP_PERMUTE:

- case GGML_OP_RESHAPE:

- case GGML_OP_TRANSPOSE:

- case GGML_OP_VIEW:

- return t->src[0];

- case GGML_OP_CPY:

- return t->src[1];

- default:

- return NULL;

- }

-}

-

-static struct ggml_tensor * get_view_source(struct ggml_tensor * t) {

- struct ggml_tensor * parent = t;

- do {

- parent = get_view_parent(parent);

- } while (ggml_is_view(parent));

- return parent;

-}

-

static bool ggml_op_can_inplace(enum ggml_op op) {

switch (op) {

case GGML_OP_SCALE:

@@ -365,7 +425,6 @@ static bool ggml_op_can_inplace(enum ggml_op op) {

case GGML_OP_DIAG_MASK_INF:

case GGML_OP_ADD:

case GGML_OP_ADD1:

- case GGML_OP_ACC:

case GGML_OP_SUB:

case GGML_OP_MUL:

case GGML_OP_DIV:

@@ -375,10 +434,8 @@ static bool ggml_op_can_inplace(enum ggml_op op) {

case GGML_OP_UNARY:

case GGML_OP_ROPE:

case GGML_OP_RMS_NORM:

- case GGML_OP_SET:

case GGML_OP_SOFT_MAX:

case GGML_OP_CONT:

- case GGML_OP_ADD_REL_POS:

return true;

default:

@@ -390,24 +447,8 @@ static void allocate_node(struct ggml_allocr * alloc, struct ggml_tensor * node)

struct hash_node * ht = alloc->hash_table;

if (node->data == NULL) {

if (ggml_is_view(node)) {

- size_t offset;

- switch(node->op) {

- case GGML_OP_VIEW:

- memcpy(&offset, node->op_params, sizeof(size_t));

- node->data = (char *) node->src[0]->data + offset;

- break;

- case GGML_OP_PERMUTE:

- case GGML_OP_RESHAPE:

- case GGML_OP_TRANSPOSE:

- node->data = node->src[0]->data;

- break;

- case GGML_OP_CPY:

- node->data = node->src[1]->data;

- break;

- default:

- GGML_ASSERT(!"unknown view op");

- break;

- }

+ assert(node->view_src->data != NULL);

+ node->data = (char *)node->view_src->data + node->view_offs;

} else {

// see if we can reuse a parent's buffer (inplace)

if (ggml_op_can_inplace(node->op)) {

@@ -418,8 +459,7 @@ static void allocate_node(struct ggml_allocr * alloc, struct ggml_tensor * node)

}

// if the node's data is external, then we cannot re-use it

- if ((char *) parent->data < (char *) alloc->data ||

- (char *) parent->data >= ((char *) alloc->data + alloc->size)) {

+ if (ggml_allocr_is_own(alloc, parent) == false) {

AT_PRINTF("not reusing parent %s for %s as %p is external\n", parent->name, node->name, parent->data);

continue;

}

@@ -427,7 +467,7 @@ static void allocate_node(struct ggml_allocr * alloc, struct ggml_tensor * node)

struct hash_node * p_hn = hash_get(ht, parent);

if (parent->data != NULL && p_hn->n_children == 1 && p_hn->n_views == 0 && ggml_are_same_layout(node, parent)) {

if (ggml_is_view(parent)) {

- struct ggml_tensor * view_src = get_view_source(parent);

+ struct ggml_tensor * view_src = parent->view_src;

struct hash_node * view_src_hn = hash_get(ht, view_src);

if (view_src_hn->n_views == 1 && view_src_hn->n_children == 0 && view_src->data == parent->data) {

// TODO: the offset of the view parent must be kept to ensure that the op doesn't overwrite

@@ -453,7 +493,7 @@ static void allocate_node(struct ggml_allocr * alloc, struct ggml_tensor * node)

}

}

-static size_t ggml_allocator_alloc_graph_tensors_n(

+static size_t ggml_allocr_alloc_graph_tensors_n(

struct ggml_allocr * alloc,

struct ggml_cgraph ** graphs, int n_graphs,

struct ggml_tensor *** inputs, struct ggml_tensor *** outputs) {

@@ -469,7 +509,7 @@ static size_t ggml_allocator_alloc_graph_tensors_n(

struct ggml_tensor * node = gf->nodes[i];

if (ggml_is_view(node)) {

- struct ggml_tensor * view_src = get_view_source(node);

+ struct ggml_tensor * view_src = node->view_src;

hash_get(ht, view_src)->n_views += 1;

}

@@ -531,11 +571,10 @@ static size_t ggml_allocator_alloc_graph_tensors_n(

AT_PRINTF("\n");

}

-

// update parents

// update immediately if there is no parse_seq

// update only at barriers if there is parse_seq

- if ((alloc->parse_seq_len==0) || alloc->parse_seq[ind] == -1) {

+ if ((alloc->parse_seq_len == 0) || alloc->parse_seq[ind] == -1) {

int update_start = alloc->parse_seq_len ? last_barrier_pos : ind;

int update_end = alloc->parse_seq_len ? ind : ind + 1;

for (int i = update_start; i < update_end; i++) {

@@ -554,17 +593,17 @@ static size_t ggml_allocator_alloc_graph_tensors_n(

if (p_hn->n_children == 0 && p_hn->n_views == 0) {

if (ggml_is_view(parent)) {

- struct ggml_tensor * view_src = get_view_source(parent);

+ struct ggml_tensor * view_src = parent->view_src;

struct hash_node * view_src_hn = hash_get(ht, view_src);

view_src_hn->n_views -= 1;

AT_PRINTF("view_src %s: %d children, %d views\n", view_src->name, view_src_hn->n_children, view_src_hn->n_views);

if (view_src_hn->n_views == 0 && view_src_hn->n_children == 0 && view_src->data != node->data) {

- ggml_allocator_free_tensor(alloc, view_src);

+ ggml_allocr_free_tensor(alloc, view_src);

}

}

else {

if (parent->data != node->data) {

- ggml_allocator_free_tensor(alloc, parent);

+ ggml_allocr_free_tensor(alloc, parent);

}

}

}

@@ -581,7 +620,7 @@ static size_t ggml_allocator_alloc_graph_tensors_n(

for (int i = 0; outputs[g][i] != NULL; i++) {

struct ggml_tensor * output = outputs[g][i];

AT_PRINTF("output: %s\n", output->name);

- ggml_allocator_free_tensor(alloc, output);

+ ggml_allocr_free_tensor(alloc, output);

}

}

}

@@ -590,5 +629,5 @@ static size_t ggml_allocator_alloc_graph_tensors_n(

}

size_t ggml_allocr_alloc_graph(struct ggml_allocr * alloc, struct ggml_cgraph * graph) {

- return ggml_allocator_alloc_graph_tensors_n(alloc, &graph, 1, NULL, NULL);

+ return ggml_allocr_alloc_graph_tensors_n(alloc, &graph, 1, NULL, NULL);

}

diff --git a/ggml-metal.m b/ggml-metal.m

index 7e2355c..b438b83 100644

--- a/ggml-metal.m

+++ b/ggml-metal.m

@@ -63,7 +63,10 @@ struct ggml_metal_context {

GGML_METAL_DECL_KERNEL(relu);

GGML_METAL_DECL_KERNEL(gelu);

GGML_METAL_DECL_KERNEL(soft_max);

+ GGML_METAL_DECL_KERNEL(soft_max_4);

GGML_METAL_DECL_KERNEL(diag_mask_inf);

+ GGML_METAL_DECL_KERNEL(diag_mask_inf_8);

+ GGML_METAL_DECL_KERNEL(get_rows_f32);

GGML_METAL_DECL_KERNEL(get_rows_f16);

GGML_METAL_DECL_KERNEL(get_rows_q4_0);

GGML_METAL_DECL_KERNEL(get_rows_q4_1);

@@ -77,6 +80,7 @@ struct ggml_metal_context {

GGML_METAL_DECL_KERNEL(norm);

GGML_METAL_DECL_KERNEL(mul_mat_f16_f32);

GGML_METAL_DECL_KERNEL(mul_mat_f16_f32_1row);

+ GGML_METAL_DECL_KERNEL(mul_mat_f16_f32_l4);

GGML_METAL_DECL_KERNEL(mul_mat_q4_0_f32);

GGML_METAL_DECL_KERNEL(mul_mat_q4_1_f32);

GGML_METAL_DECL_KERNEL(mul_mat_q8_0_f32);

@@ -117,14 +121,17 @@ static NSString * const msl_library_source = @"see metal.metal";

struct ggml_metal_context * ggml_metal_init(int n_cb) {

metal_printf("%s: allocating\n", __func__);

- // Show all the Metal device instances in the system

- NSArray * devices = MTLCopyAllDevices();

id device;

NSString * s;

+

+#if TARGET_OS_OSX

+ // Show all the Metal device instances in the system

+ NSArray * devices = MTLCopyAllDevices();

for (device in devices) {

s = [device name];

metal_printf("%s: found device: %s\n", __func__, [s UTF8String]);

}

+#endif

// Pick and show default Metal device

device = MTLCreateSystemDefaultDevice();

@@ -139,14 +146,22 @@ struct ggml_metal_context * ggml_metal_init(int n_cb) {

ctx->n_buffers = 0;

ctx->concur_list_len = 0;

- ctx->d_queue = dispatch_queue_create("llama.cpp", DISPATCH_QUEUE_CONCURRENT);

+ ctx->d_queue = dispatch_queue_create("ggml-metal", DISPATCH_QUEUE_CONCURRENT);

-#if 0

- // compile from source string and show compile log

+#ifdef GGML_SWIFT

+ // load the default.metallib file

{

NSError * error = nil;

- ctx->library = [ctx->device newLibraryWithSource:msl_library_source options:nil error:&error];

+ NSBundle * bundle = [NSBundle bundleForClass:[GGMLMetalClass class]];

+ NSString * llamaBundlePath = [bundle pathForResource:@"llama_llama" ofType:@"bundle"];

+ NSBundle * llamaBundle = [NSBundle bundleWithPath:llamaBundlePath];

+ NSString * libPath = [llamaBundle pathForResource:@"default" ofType:@"metallib"];

+ NSURL * libURL = [NSURL fileURLWithPath:libPath];

+

+ // Load the metallib file into a Metal library

+ ctx->library = [ctx->device newLibraryWithURL:libURL error:&error];

+

if (error) {

metal_printf("%s: error: %s\n", __func__, [[error description] UTF8String]);

return NULL;

@@ -161,7 +176,7 @@ struct ggml_metal_context * ggml_metal_init(int n_cb) {

//NSString * path = [[NSBundle mainBundle] pathForResource:@"../../examples/metal/metal" ofType:@"metal"];

NSBundle * bundle = [NSBundle bundleForClass:[GGMLMetalClass class]];

- NSString * path = [bundle pathForResource:@"ggml-metal" ofType:@"metal"];

+ NSString * path = [bundle pathForResource:@"ggml-metal" ofType:@"metal"];

metal_printf("%s: loading '%s'\n", __func__, [path UTF8String]);

NSString * src = [NSString stringWithContentsOfFile:path encoding:NSUTF8StringEncoding error:&error];

@@ -207,7 +222,10 @@ struct ggml_metal_context * ggml_metal_init(int n_cb) {

GGML_METAL_ADD_KERNEL(relu);

GGML_METAL_ADD_KERNEL(gelu);

GGML_METAL_ADD_KERNEL(soft_max);

+ GGML_METAL_ADD_KERNEL(soft_max_4);

GGML_METAL_ADD_KERNEL(diag_mask_inf);

+ GGML_METAL_ADD_KERNEL(diag_mask_inf_8);

+ GGML_METAL_ADD_KERNEL(get_rows_f32);

GGML_METAL_ADD_KERNEL(get_rows_f16);

GGML_METAL_ADD_KERNEL(get_rows_q4_0);

GGML_METAL_ADD_KERNEL(get_rows_q4_1);

@@ -221,6 +239,7 @@ struct ggml_metal_context * ggml_metal_init(int n_cb) {

GGML_METAL_ADD_KERNEL(norm);

GGML_METAL_ADD_KERNEL(mul_mat_f16_f32);

GGML_METAL_ADD_KERNEL(mul_mat_f16_f32_1row);

+ GGML_METAL_ADD_KERNEL(mul_mat_f16_f32_l4);

GGML_METAL_ADD_KERNEL(mul_mat_q4_0_f32);

GGML_METAL_ADD_KERNEL(mul_mat_q4_1_f32);

GGML_METAL_ADD_KERNEL(mul_mat_q8_0_f32);

@@ -247,13 +266,15 @@ struct ggml_metal_context * ggml_metal_init(int n_cb) {

#undef GGML_METAL_ADD_KERNEL

}

- metal_printf("%s: recommendedMaxWorkingSetSize = %8.2f MB\n", __func__, ctx->device.recommendedMaxWorkingSetSize / 1024.0 / 1024.0);

metal_printf("%s: hasUnifiedMemory = %s\n", __func__, ctx->device.hasUnifiedMemory ? "true" : "false");

+#if TARGET_OS_OSX

+ metal_printf("%s: recommendedMaxWorkingSetSize = %8.2f MB\n", __func__, ctx->device.recommendedMaxWorkingSetSize / 1024.0 / 1024.0);

if (ctx->device.maxTransferRate != 0) {

metal_printf("%s: maxTransferRate = %8.2f MB/s\n", __func__, ctx->device.maxTransferRate / 1024.0 / 1024.0);

} else {

metal_printf("%s: maxTransferRate = built-in GPU\n", __func__);

}

+#endif

return ctx;

}

@@ -273,7 +294,10 @@ void ggml_metal_free(struct ggml_metal_context * ctx) {

GGML_METAL_DEL_KERNEL(relu);

GGML_METAL_DEL_KERNEL(gelu);

GGML_METAL_DEL_KERNEL(soft_max);

+ GGML_METAL_DEL_KERNEL(soft_max_4);

GGML_METAL_DEL_KERNEL(diag_mask_inf);

+ GGML_METAL_DEL_KERNEL(diag_mask_inf_8);

+ GGML_METAL_DEL_KERNEL(get_rows_f32);

GGML_METAL_DEL_KERNEL(get_rows_f16);

GGML_METAL_DEL_KERNEL(get_rows_q4_0);

GGML_METAL_DEL_KERNEL(get_rows_q4_1);

@@ -287,6 +311,7 @@ void ggml_metal_free(struct ggml_metal_context * ctx) {

GGML_METAL_DEL_KERNEL(norm);

GGML_METAL_DEL_KERNEL(mul_mat_f16_f32);

GGML_METAL_DEL_KERNEL(mul_mat_f16_f32_1row);

+ GGML_METAL_DEL_KERNEL(mul_mat_f16_f32_l4);

GGML_METAL_DEL_KERNEL(mul_mat_q4_0_f32);

GGML_METAL_DEL_KERNEL(mul_mat_q4_1_f32);

GGML_METAL_DEL_KERNEL(mul_mat_q8_0_f32);

@@ -365,6 +390,7 @@ static id ggml_metal_get_buffer(struct ggml_metal_context * ctx, stru

for (int i = 0; i < ctx->n_buffers; ++i) {

const int64_t ioffs = (int64_t) t->data - (int64_t) ctx->buffers[i].data;

+ //metal_printf("ioffs = %10ld, tsize = %10ld, sum = %10ld, ctx->buffers[%d].size = %10ld, name = %s\n", ioffs, tsize, ioffs + tsize, i, ctx->buffers[i].size, ctx->buffers[i].name);

if (ioffs >= 0 && ioffs + tsize <= (int64_t) ctx->buffers[i].size) {

*offs = (size_t) ioffs;

@@ -454,6 +480,7 @@ bool ggml_metal_add_buffer(

}

}

+#if TARGET_OS_OSX

metal_printf(", (%8.2f / %8.2f)",

ctx->device.currentAllocatedSize / 1024.0 / 1024.0,

ctx->device.recommendedMaxWorkingSetSize / 1024.0 / 1024.0);

@@ -463,6 +490,9 @@ bool ggml_metal_add_buffer(

} else {

metal_printf("\n");

}

+#else

+ metal_printf(", (%8.2f)\n", ctx->device.currentAllocatedSize / 1024.0 / 1024.0);

+#endif

}

return true;

@@ -698,6 +728,7 @@ void ggml_metal_graph_compute(

case GGML_OP_ADD:

{

GGML_ASSERT(ggml_is_contiguous(src0));

+ GGML_ASSERT(ggml_is_contiguous(src1));

// utilize float4

GGML_ASSERT(ne00 % 4 == 0);

@@ -705,6 +736,7 @@ void ggml_metal_graph_compute(

if (ggml_nelements(src1) == ne10) {

// src1 is a row

+ GGML_ASSERT(ne11 == 1);

[encoder setComputePipelineState:ctx->pipeline_add_row];

} else {

[encoder setComputePipelineState:ctx->pipeline_add];

@@ -721,6 +753,7 @@ void ggml_metal_graph_compute(

case GGML_OP_MUL:

{

GGML_ASSERT(ggml_is_contiguous(src0));

+ GGML_ASSERT(ggml_is_contiguous(src1));

// utilize float4

GGML_ASSERT(ne00 % 4 == 0);

@@ -728,6 +761,7 @@ void ggml_metal_graph_compute(

if (ggml_nelements(src1) == ne10) {

// src1 is a row

+ GGML_ASSERT(ne11 == 1);

[encoder setComputePipelineState:ctx->pipeline_mul_row];

} else {

[encoder setComputePipelineState:ctx->pipeline_mul];

@@ -743,6 +777,8 @@ void ggml_metal_graph_compute(

} break;

case GGML_OP_SCALE:

{

+ GGML_ASSERT(ggml_is_contiguous(src0));

+

const float scale = *(const float *) src1->data;

[encoder setComputePipelineState:ctx->pipeline_scale];

@@ -750,7 +786,7 @@ void ggml_metal_graph_compute(

[encoder setBuffer:id_dst offset:offs_dst atIndex:1];

[encoder setBytes:&scale length:sizeof(scale) atIndex:2];

- const int64_t n = ggml_nelements(dst);

+ const int64_t n = ggml_nelements(dst)/4;

[encoder dispatchThreadgroups:MTLSizeMake(n, 1, 1) threadsPerThreadgroup:MTLSizeMake(1, 1, 1)];

} break;

@@ -762,7 +798,7 @@ void ggml_metal_graph_compute(

[encoder setBuffer:id_src0 offset:offs_src0 atIndex:0];

[encoder setBuffer:id_dst offset:offs_dst atIndex:1];

- const int64_t n = ggml_nelements(dst);

+ const int64_t n = ggml_nelements(dst)/4;

[encoder dispatchThreadgroups:MTLSizeMake(n, 1, 1) threadsPerThreadgroup:MTLSizeMake(1, 1, 1)];

} break;

@@ -782,7 +818,7 @@ void ggml_metal_graph_compute(

[encoder setBuffer:id_src0 offset:offs_src0 atIndex:0];

[encoder setBuffer:id_dst offset:offs_dst atIndex:1];

- const int64_t n = ggml_nelements(dst);

+ const int64_t n = ggml_nelements(dst)/4;

[encoder dispatchThreadgroups:MTLSizeMake(n, 1, 1) threadsPerThreadgroup:MTLSizeMake(1, 1, 1)];

} break;

@@ -796,13 +832,16 @@ void ggml_metal_graph_compute(

{

const int nth = 32;

- [encoder setComputePipelineState:ctx->pipeline_soft_max];

+ if (ne00%4 == 0) {

+ [encoder setComputePipelineState:ctx->pipeline_soft_max_4];

+ } else {

+ [encoder setComputePipelineState:ctx->pipeline_soft_max];

+ }

[encoder setBuffer:id_src0 offset:offs_src0 atIndex:0];

[encoder setBuffer:id_dst offset:offs_dst atIndex:1];

[encoder setBytes:&ne00 length:sizeof(ne00) atIndex:2];

[encoder setBytes:&ne01 length:sizeof(ne01) atIndex:3];

[encoder setBytes:&ne02 length:sizeof(ne02) atIndex:4];

- [encoder setThreadgroupMemoryLength:nth*sizeof(float) atIndex:0];

[encoder dispatchThreadgroups:MTLSizeMake(ne01, ne02, ne03) threadsPerThreadgroup:MTLSizeMake(nth, 1, 1)];

} break;

@@ -810,14 +849,23 @@ void ggml_metal_graph_compute(

{

const int n_past = ((int32_t *)(dst->op_params))[0];

- [encoder setComputePipelineState:ctx->pipeline_diag_mask_inf];

+ if (ne00%8 == 0) {

+ [encoder setComputePipelineState:ctx->pipeline_diag_mask_inf_8];

+ } else {

+ [encoder setComputePipelineState:ctx->pipeline_diag_mask_inf];

+ }

[encoder setBuffer:id_src0 offset:offs_src0 atIndex:0];

[encoder setBuffer:id_dst offset:offs_dst atIndex:1];

[encoder setBytes:&ne00 length:sizeof(ne00) atIndex:2];

[encoder setBytes:&ne01 length:sizeof(ne01) atIndex:3];

[encoder setBytes:&n_past length:sizeof(int) atIndex:4];

- [encoder dispatchThreadgroups:MTLSizeMake(ne00, ne01, ne02) threadsPerThreadgroup:MTLSizeMake(1, 1, 1)];

+ if (ne00%8 == 0) {

+ [encoder dispatchThreadgroups:MTLSizeMake(ne00*ne01*ne02/8, 1, 1) threadsPerThreadgroup:MTLSizeMake(1, 1, 1)];

+ }

+ else {

+ [encoder dispatchThreadgroups:MTLSizeMake(ne00, ne01, ne02) threadsPerThreadgroup:MTLSizeMake(1, 1, 1)];

+ }

} break;

case GGML_OP_MUL_MAT:

{

@@ -830,8 +878,8 @@ void ggml_metal_graph_compute(

// for now the matrix-matrix multiplication kernel only works on A14+/M1+ SoCs

// AMD GPU and older A-chips will reuse matrix-vector multiplication kernel

- if (ggml_is_contiguous(src0) &&

- ggml_is_contiguous(src1) &&

+ if (!ggml_is_transposed(src0) &&

+ !ggml_is_transposed(src1) &&

src1t == GGML_TYPE_F32 &&

[ctx->device supportsFamily:MTLGPUFamilyApple7] &&

ne00%32 == 0 &&

@@ -856,14 +904,18 @@ void ggml_metal_graph_compute(

[encoder setBytes:&nb01 length:sizeof(nb01) atIndex:5];

[encoder setBytes:&nb02 length:sizeof(nb02) atIndex:6];

[encoder setBytes:&ne12 length:sizeof(ne12) atIndex:7];

- [encoder setBytes:&ne0 length:sizeof(ne0) atIndex:8];

- [encoder setBytes:&ne1 length:sizeof(ne1) atIndex:9];

- [encoder setBytes:&gqa length:sizeof(gqa) atIndex:10];

+ [encoder setBytes:&nb10 length:sizeof(nb10) atIndex:8];

+ [encoder setBytes:&nb11 length:sizeof(nb11) atIndex:9];

+ [encoder setBytes:&nb12 length:sizeof(nb12) atIndex:10];

+ [encoder setBytes:&ne0 length:sizeof(ne0) atIndex:11];

+ [encoder setBytes:&ne1 length:sizeof(ne1) atIndex:12];

+ [encoder setBytes:&gqa length:sizeof(gqa) atIndex:13];

[encoder setThreadgroupMemoryLength:8192 atIndex:0];

[encoder dispatchThreadgroups:MTLSizeMake( (ne11+31)/32, (ne01+63) / 64, ne12) threadsPerThreadgroup:MTLSizeMake(128, 1, 1)];

} else {

int nth0 = 32;

int nth1 = 1;

+ int nrows = 1;

// use custom matrix x vector kernel

switch (src0t) {

@@ -873,8 +925,14 @@ void ggml_metal_graph_compute(

nth1 = 1;

if (ne11 * ne12 < 4) {

[encoder setComputePipelineState:ctx->pipeline_mul_mat_f16_f32_1row];

+ //} else if (ne00 >= 128 && ne01 >= 8 && ne00%4 == 0) {

+ } else if (false) {

+ // TODO: with ggml_mul_mat_pad this kernel no longer seems to be needed

+ [encoder setComputePipelineState:ctx->pipeline_mul_mat_f16_f32_l4];

+ nrows = ne11;

} else {

[encoder setComputePipelineState:ctx->pipeline_mul_mat_f16_f32];

+ nrows = 4;

}

} break;

case GGML_TYPE_Q4_0:

@@ -995,7 +1053,7 @@ void ggml_metal_graph_compute(

else if (src0t == GGML_TYPE_Q6_K) {

[encoder dispatchThreadgroups:MTLSizeMake((ne01 + 1)/2, ne11, ne12) threadsPerThreadgroup:MTLSizeMake(nth0, nth1, 1)];

} else {

- int64_t ny = (ne11 + 3)/4;

+ int64_t ny = (ne11 + nrows - 1)/nrows;

[encoder dispatchThreadgroups:MTLSizeMake(ne01, ny, ne12) threadsPerThreadgroup:MTLSizeMake(nth0, nth1, 1)];

}

}

@@ -1003,6 +1061,7 @@ void ggml_metal_graph_compute(

case GGML_OP_GET_ROWS:

{

switch (src0->type) {

+ case GGML_TYPE_F32: [encoder setComputePipelineState:ctx->pipeline_get_rows_f32]; break;

case GGML_TYPE_F16: [encoder setComputePipelineState:ctx->pipeline_get_rows_f16]; break;

case GGML_TYPE_Q4_0: [encoder setComputePipelineState:ctx->pipeline_get_rows_q4_0]; break;

case GGML_TYPE_Q4_1: [encoder setComputePipelineState:ctx->pipeline_get_rows_q4_1]; break;

@@ -1018,9 +1077,9 @@ void ggml_metal_graph_compute(

[encoder setBuffer:id_src0 offset:offs_src0 atIndex:0];

[encoder setBuffer:id_src1 offset:offs_src1 atIndex:1];

[encoder setBuffer:id_dst offset:offs_dst atIndex:2];

- [encoder setBytes:&(src0->ne[0]) length:sizeof( int64_t) atIndex:3];

- [encoder setBytes:&(src0->nb[1]) length:sizeof(uint64_t) atIndex:4];

- [encoder setBytes:&(dst->nb[1]) length:sizeof(uint64_t) atIndex:5];

+ [encoder setBytes:&ne00 length:sizeof( int64_t) atIndex:3];

+ [encoder setBytes:&nb01 length:sizeof(uint64_t) atIndex:4];

+ [encoder setBytes:&nb1 length:sizeof(uint64_t) atIndex:5];

const int64_t n = ggml_nelements(src1);

diff --git a/ggml-metal.metal b/ggml-metal.metal

index 5070561..0db037c 100644

--- a/ggml-metal.metal

+++ b/ggml-metal.metal

@@ -38,7 +38,7 @@ kernel void kernel_add_row(

device const float4 * src0,

device const float4 * src1,

device float4 * dst,

- constant int64_t & nb,

+ constant int64_t & nb,

uint tpig[[thread_position_in_grid]]) {

dst[tpig] = src0[tpig] + src1[tpig % nb];

}

@@ -63,18 +63,18 @@ kernel void kernel_mul_row(

}

kernel void kernel_scale(

- device const float * src0,

- device float * dst,

+ device const float4 * src0,

+ device float4 * dst,

constant float & scale,

uint tpig[[thread_position_in_grid]]) {

dst[tpig] = src0[tpig] * scale;

}

kernel void kernel_silu(

- device const float * src0,

- device float * dst,

+ device const float4 * src0,

+ device float4 * dst,

uint tpig[[thread_position_in_grid]]) {

- float x = src0[tpig];

+ device const float4 & x = src0[tpig];

dst[tpig] = x / (1.0f + exp(-x));

}

@@ -89,10 +89,10 @@ constant float GELU_COEF_A = 0.044715f;

constant float SQRT_2_OVER_PI = 0.79788456080286535587989211986876f;

kernel void kernel_gelu(

- device const float * src0,

- device float * dst,

+ device const float4 * src0,

+ device float4 * dst,

uint tpig[[thread_position_in_grid]]) {

- float x = src0[tpig];

+ device const float4 & x = src0[tpig];

// BEWARE !!!

// Simply using "tanh" instead of "precise::tanh" will sometimes results in NaNs!

@@ -107,7 +107,6 @@ kernel void kernel_soft_max(

constant int64_t & ne00,

constant int64_t & ne01,

constant int64_t & ne02,

- threadgroup float * buf [[threadgroup(0)]],

uint3 tgpig[[threadgroup_position_in_grid]],

uint3 tpitg[[thread_position_in_threadgroup]],

uint3 ntg[[threads_per_threadgroup]]) {

@@ -119,64 +118,70 @@ kernel void kernel_soft_max(

device float * pdst = dst + i03*ne02*ne01*ne00 + i02*ne01*ne00 + i01*ne00;

// parallel max

- buf[tpitg[0]] = -INFINITY;

- for (int i00 = tpitg[0]; i00 < ne00; i00 += ntg[0]) {

- buf[tpitg[0]] = MAX(buf[tpitg[0]], psrc0[i00]);

+ float lmax = psrc0[tpitg[0]];

+ for (int i00 = tpitg[0] + ntg[0]; i00 < ne00; i00 += ntg[0]) {

+ lmax = MAX(lmax, psrc0[i00]);

}

-

- // reduce

- threadgroup_barrier(mem_flags::mem_threadgroup);

- for (uint i = ntg[0]/2; i > 0; i /= 2) {

- if (tpitg[0] < i) {

- buf[tpitg[0]] = MAX(buf[tpitg[0]], buf[tpitg[0] + i]);

- }

- threadgroup_barrier(mem_flags::mem_threadgroup);

- }

-

- //// broadcast - not needed. There is a threadgroup barrier above in the last iteration of

- // the loop, and when that is done, buf[0] has the correct (synchronized) value

- //if (tpitg[0] == 0) {

- // buf[0] = buf[0];

- //}

-

- //threadgroup_barrier(mem_flags::mem_threadgroup);

-

- const float max = buf[0];

+ const float max = simd_max(lmax);

// parallel sum

- buf[tpitg[0]] = 0.0f;

+ float lsum = 0.0f;

for (int i00 = tpitg[0]; i00 < ne00; i00 += ntg[0]) {

const float exp_psrc0 = exp(psrc0[i00] - max);

- buf[tpitg[0]] += exp_psrc0;

+ lsum += exp_psrc0;

// Remember the result of exp here. exp is expensive, so we really do not

// whish to compute it twice.

pdst[i00] = exp_psrc0;

}

- // reduce

- threadgroup_barrier(mem_flags::mem_threadgroup);

- for (uint i = ntg[0]/2; i > 0; i /= 2) {

- if (tpitg[0] < i) {

- buf[tpitg[0]] += buf[tpitg[0] + i];

- }

- threadgroup_barrier(mem_flags::mem_threadgroup);

- }

-

- // broadcast - not needed, see above

- //// broadcast

- //if (tpitg[0] == 0) {

- // buf[0] = buf[0];

- //}

-

- //threadgroup_barrier(mem_flags::mem_threadgroup);

-

- const float sum = buf[0];

+ const float sum = simd_sum(lsum);

for (int i00 = tpitg[0]; i00 < ne00; i00 += ntg[0]) {

pdst[i00] /= sum;

}

}

+kernel void kernel_soft_max_4(

+ device const float * src0,

+ device float * dst,

+ constant int64_t & ne00,

+ constant int64_t & ne01,

+ constant int64_t & ne02,

+ uint3 tgpig[[threadgroup_position_in_grid]],

+ uint3 tpitg[[thread_position_in_threadgroup]],

+ uint3 ntg[[threads_per_threadgroup]]) {

+ const int64_t i03 = tgpig[2];

+ const int64_t i02 = tgpig[1];

+ const int64_t i01 = tgpig[0];

+

+ device const float4 * psrc4 = (device const float4 *)(src0 + i03*ne02*ne01*ne00 + i02*ne01*ne00 + i01*ne00);

+ device float4 * pdst4 = (device float4 *)(dst + i03*ne02*ne01*ne00 + i02*ne01*ne00 + i01*ne00);

+

+ // parallel max

+ float4 lmax4 = psrc4[tpitg[0]];

+ for (int i00 = tpitg[0] + ntg[0]; i00 < ne00/4; i00 += ntg[0]) {

+ lmax4 = fmax(lmax4, psrc4[i00]);

+ }

+ float lmax = MAX(MAX(lmax4[0], lmax4[1]), MAX(lmax4[2], lmax4[3]));

+

+ const float max = simd_max(lmax);

+

+ // parallel sum

+ float4 lsum4 = 0.0f;

+ for (int i00 = tpitg[0]; i00 < ne00/4; i00 += ntg[0]) {

+ const float4 exp_psrc4 = exp(psrc4[i00] - max);

+ lsum4 += exp_psrc4;

+ pdst4[i00] = exp_psrc4;

+ }

+ float lsum = lsum4[0] + lsum4[1] + lsum4[2] + lsum4[3];

+

+ const float sum = simd_sum(lsum);

+

+ for (int i00 = tpitg[0]; i00 < ne00/4; i00 += ntg[0]) {

+ pdst4[i00] /= sum;

+ }

+}

+

kernel void kernel_diag_mask_inf(

device const float * src0,

device float * dst,

@@ -192,6 +197,33 @@ kernel void kernel_diag_mask_inf(

dst[i02*ne01*ne00 + i01*ne00 + i00] = -INFINITY;

} else {

dst[i02*ne01*ne00 + i01*ne00 + i00] = src0[i02*ne01*ne00 + i01*ne00 + i00];

+ }

+}

+

+kernel void kernel_diag_mask_inf_8(

+ device const float4 * src0,

+ device float4 * dst,

+ constant int64_t & ne00,

+ constant int64_t & ne01,

+ constant int & n_past,

+ uint3 tpig[[thread_position_in_grid]]) {

+

+ const int64_t i = 2*tpig[0];

+

+ dst[i+0] = src0[i+0];

+ dst[i+1] = src0[i+1];

+ int64_t i4 = 4*i;

+ const int64_t i02 = i4/(ne00*ne01); i4 -= i02*ne00*ne01;

+ const int64_t i01 = i4/(ne00); i4 -= i01*ne00;

+ const int64_t i00 = i4;

+ for (int k = 3; k >= 0; --k) {

+ if (i00 + 4 + k <= n_past + i01) {

+ break;

+ }

+ dst[i+1][k] = -INFINITY;

+ if (i00 + k > n_past + i01) {

+ dst[i][k] = -INFINITY;

+ }

}

}

@@ -616,6 +648,49 @@ kernel void kernel_mul_mat_f16_f32(

}

}

+// Assumes row size (ne00) is a multiple of 4

+kernel void kernel_mul_mat_f16_f32_l4(

+ device const char * src0,

+ device const char * src1,

+ device float * dst,

+ constant int64_t & ne00,

+ constant int64_t & ne01,

+ constant int64_t & ne02,

+ constant uint64_t & nb00,

+ constant uint64_t & nb01,

+ constant uint64_t & nb02,

+ constant int64_t & ne10,

+ constant int64_t & ne11,

+ constant int64_t & ne12,

+ constant uint64_t & nb10,

+ constant uint64_t & nb11,

+ constant uint64_t & nb12,

+ constant int64_t & ne0,

+ constant int64_t & ne1,

+ uint3 tgpig[[threadgroup_position_in_grid]],

+ uint tiisg[[thread_index_in_simdgroup]]) {

+

+ const int nrows = ne11;

+ const int64_t r0 = tgpig.x;

+ const int64_t im = tgpig.z;

+

+ device const half4 * x4 = (device const half4 *) (src0 + r0*nb01 + im/(ne12/ne02)*nb02);

+

+ for (int r1 = 0; r1 < nrows; ++r1) {

+ device const float4 * y4 = (device const float4 *) (src1 + r1*nb11 + im*nb12);

+

+ float sumf = 0;

+ for (int i = tiisg; i < ne00/4; i += 32) {

+ for (int k = 0; k < 4; ++k) sumf += (float) x4[i][k] * y4[i][k];

+ }

+

+ float all_sum = simd_sum(sumf);

+ if (tiisg == 0) {

+ dst[im*ne1*ne0 + r1*ne0 + r0] = all_sum;

+ }

+ }

+}

+

kernel void kernel_alibi_f32(

device const float * src0,

device float * dst,

@@ -1123,31 +1198,40 @@ kernel void kernel_mul_mat_q3_K_f32(

device const block_q3_K * x = (device const block_q3_K *) src0 + first_row*nb + offset0;

device const float * yy = (device const float *) src1 + r1*ne10 + r2*ne00*ne1;

- float yl[16];

+ float yl[32];

- const uint16_t kmask1 = 0x0303;

+ const uint16_t kmask1 = 0x3030;

const uint16_t kmask2 = 0x0f0f;

- const int tid = tiisg/2;

- const int ix = tiisg%2;

- const int ip = tid/8; // 0 or 1

- const int il = tid/2 - 4*ip; // 0...3

+ const int tid = tiisg/4;

+ const int ix = tiisg%4;

+ const int ip = tid/4; // 0 or 1

+ const int il = 2*((tid%4)/2); // 0 or 2

const int ir = tid%2;

const int n = 8;

const int l0 = n*ir;

- const uint16_t m1 = 1 << (4*ip + il);

- const uint16_t m2 = m1 << 8;

+ // One would think that the Metal compiler would figure out that ip and il can only have

+ // 4 possible states, and optimize accordingly. Well, no. It needs help, and we do it

+ // with these two tales.

+ //

+ // Possible masks for the high bit

+ const ushort4 mm[4] = {{0x0001, 0x0100, 0x0002, 0x0200}, // ip = 0, il = 0

+ {0x0004, 0x0400, 0x0008, 0x0800}, // ip = 0, il = 2

+ {0x0010, 0x1000, 0x0020, 0x2000}, // ip = 1, il = 0

+ {0x0040, 0x4000, 0x0080, 0x8000}}; // ip = 1, il = 2

+

+ // Possible masks for the low 2 bits

+ const int4 qm[2] = {{0x0003, 0x0300, 0x000c, 0x0c00}, {0x0030, 0x3000, 0x00c0, 0xc000}};

+

+ const ushort4 hm = mm[2*ip + il/2];

const int shift = 2*il;

- const uint16_t qm1 = 0x0003 << shift;

- const uint16_t qm2 = 0x0300 << shift;

- const int32_t v1 = 4 << shift;

- const int32_t v2 = 1024 << shift;

+ const float v1 = il == 0 ? 4.f : 64.f;

+ const float v2 = 4.f * v1;

const uint16_t s_shift1 = 4*ip;

- const uint16_t s_shift2 = s_shift1 + 2*(il/2);

- const int ik = 4 + (il%2);

+ const uint16_t s_shift2 = s_shift1 + il;

const int q_offset = 32*ip + l0;

const int y_offset = 128*ip + 32*il + l0;

@@ -1156,12 +1240,19 @@ kernel void kernel_mul_mat_q3_K_f32(

device const float * y1 = yy + ix*QK_K + y_offset;

- float sumf1[2] = {0.f}, sumf2[2] = {0.f};

- for (int i = ix; i < nb; i += 2) {

+ uint32_t scales32, aux32;

+ thread uint16_t * scales16 = (thread uint16_t *)&scales32;

+ thread const int8_t * scales = (thread const int8_t *)&scales32;

+

+ float sumf1[2] = {0.f};

+ float sumf2[2] = {0.f};

+ for (int i = ix; i < nb; i += 4) {

for (int l = 0; l < 8; ++l) {

- yl[l+0] = y1[l+ 0];

- yl[l+8] = y1[l+16];

+ yl[l+ 0] = y1[l+ 0];

+ yl[l+ 8] = y1[l+16];

+ yl[l+16] = y1[l+32];

+ yl[l+24] = y1[l+48];

}

device const uint16_t * q = (device const uint16_t *)(x[i].qs + q_offset);

@@ -1172,27 +1263,43 @@ kernel void kernel_mul_mat_q3_K_f32(

for (int row = 0; row < 2; ++row) {

const float d_all = (float)dh[0];

- const char2 scales = as_type((uint16_t)(((a[il] >> s_shift1) & kmask2) | (((a[ik] >> s_shift2) & kmask1) << 4)));

- float s1 = 0, s2 = 0;

- for (int l = 0; l < n; l += 2) {

- const uint16_t qs = q[l/2];

- s1 += yl[l+0] * ((int32_t)(qs & qm1) - ((h[l/2] & m1) ? 0 : v1));

- s2 += yl[l+1] * ((int32_t)(qs & qm2) - ((h[l/2] & m2) ? 0 : v2));

- }

- float d = d_all * (s1 + 1.f/256.f * s2);

- sumf1[row] += d * scales[0];

- sumf2[row] += d;

+ scales16[0] = a[4];

+ scales16[1] = a[5];

+ aux32 = ((scales32 >> s_shift2) << 4) & 0x30303030;

+ scales16[0] = a[il+0];

+ scales16[1] = a[il+1];

+ scales32 = ((scales32 >> s_shift1) & 0x0f0f0f0f) | aux32;

- s1 = s2 = 0;

+ float s1 = 0, s2 = 0, s3 = 0, s4 = 0, s5 = 0, s6 = 0;

for (int l = 0; l < n; l += 2) {

- const uint16_t qs = q[l/2+8];

- s1 += yl[l+8] * ((int32_t)(qs & qm1) - ((h[l/2+8] & m1) ? 0 : v1));

- s2 += yl[l+9] * ((int32_t)(qs & qm2) - ((h[l/2+8] & m2) ? 0 : v2));

+ const int32_t qs = q[l/2];

+ s1 += yl[l+0] * (qs & qm[il/2][0]);

+ s2 += yl[l+1] * (qs & qm[il/2][1]);

+ s3 += ((h[l/2] & hm[0]) ? 0.f : yl[l+0]) + ((h[l/2] & hm[1]) ? 0.f : yl[l+1]);

+ s4 += yl[l+16] * (qs & qm[il/2][2]);

+ s5 += yl[l+17] * (qs & qm[il/2][3]);

+ s6 += ((h[l/2] & hm[2]) ? 0.f : yl[l+16]) + ((h[l/2] & hm[3]) ? 0.f : yl[l+17]);

}

- d = d_all * (s1 + 1.f/256.f * s2);

- sumf1[row] += d * scales[1];

- sumf2[row] += d;

+ float d1 = d_all * (s1 + 1.f/256.f * s2 - s3*v1);

+ float d2 = d_all * (s4 + 1.f/256.f * s5 - s6*v2);

+ sumf1[row] += d1 * (scales[0] - 32);

+ sumf2[row] += d2 * (scales[2] - 32);

+

+ s1 = s2 = s3 = s4 = s5 = s6 = 0;

+ for (int l = 0; l < n; l += 2) {

+ const int32_t qs = q[l/2+8];

+ s1 += yl[l+8] * (qs & qm[il/2][0]);

+ s2 += yl[l+9] * (qs & qm[il/2][1]);

+ s3 += ((h[l/2+8] & hm[0]) ? 0.f : yl[l+8]) + ((h[l/2+8] & hm[1]) ? 0.f : yl[l+9]);

+ s4 += yl[l+24] * (qs & qm[il/2][2]);

+ s5 += yl[l+25] * (qs & qm[il/2][3]);

+ s6 += ((h[l/2+8] & hm[2]) ? 0.f : yl[l+24]) + ((h[l/2+8] & hm[3]) ? 0.f : yl[l+25]);

+ }

+ d1 = d_all * (s1 + 1.f/256.f * s2 - s3*v1);

+ d2 = d_all * (s4 + 1.f/256.f * s5 - s6*v2);

+ sumf1[row] += d1 * (scales[1] - 32);

+ sumf2[row] += d2 * (scales[3] - 32);

q += step;

h += step;

@@ -1201,15 +1308,17 @@ kernel void kernel_mul_mat_q3_K_f32(

}

- y1 += 2 * QK_K;

+ y1 += 4 * QK_K;

}

for (int row = 0; row < 2; ++row) {

- const float sumf = (sumf1[row] - 32.f*sumf2[row]) / (1 << shift);

- const float tot = simd_sum(sumf);

- if (tiisg == 0) {

- dst[r1*ne0 + r2*ne0*ne1 + first_row + row] = tot;

+ const float sumf = (sumf1[row] + 0.25f * sumf2[row]) / (1 << shift);

+ sumf1[row] = simd_sum(sumf);

+ }

+ if (tiisg == 0) {

+ for (int row = 0; row < 2; ++row) {

+ dst[r1*ne0 + r2*ne0*ne1 + first_row + row] = sumf1[row];

}

}

}

@@ -1564,17 +1673,25 @@ kernel void kernel_mul_mat_q5_K_f32(

sc16[2] = ((a[4] >> 0) & kmask2) | ((a[0] & kmask3) >> 2);

sc16[3] = ((a[4] >> 4) & kmask2) | ((a[2] & kmask3) >> 2);

- float4 acc = {0.f, 0.f, 0.f, 0.f};

+ float4 acc1 = {0.f};

+ float4 acc2 = {0.f};

for (int l = 0; l < n; ++l) {

uint8_t h = qh[l];

- acc[0] += yl[l+0] * ((uint16_t)(q1[l] & 0x0F) + (h & hm1 ? 16 : 0));

- acc[1] += yl[l+8] * ((uint16_t)(q1[l] & 0xF0) + (h & hm2 ? 256 : 0));

- acc[2] += yh[l+0] * ((uint16_t)(q2[l] & 0x0F) + (h & hm3 ? 16 : 0));

- acc[3] += yh[l+8] * ((uint16_t)(q2[l] & 0xF0) + (h & hm4 ? 256 : 0));

+ acc1[0] += yl[l+0] * (q1[l] & 0x0F);

+ acc1[1] += yl[l+8] * (q1[l] & 0xF0);

+ acc1[2] += yh[l+0] * (q2[l] & 0x0F);

+ acc1[3] += yh[l+8] * (q2[l] & 0xF0);

+ acc2[0] += h & hm1 ? yl[l+0] : 0.f;

+ acc2[1] += h & hm2 ? yl[l+8] : 0.f;

+ acc2[2] += h & hm3 ? yh[l+0] : 0.f;

+ acc2[3] += h & hm4 ? yh[l+8] : 0.f;

}

const float dall = dh[0];

const float dmin = dh[1];

- sumf[row] += dall * (acc[0] * sc8[0] + acc[1] * sc8[1] * 1.f/16.f + acc[2] * sc8[4] + acc[3] * sc8[5] * 1.f/16.f) -

+ sumf[row] += dall * (sc8[0] * (acc1[0] + 16.f*acc2[0]) +

+ sc8[1] * (acc1[1]/16.f + 16.f*acc2[1]) +

+ sc8[4] * (acc1[2] + 16.f*acc2[2]) +

+ sc8[5] * (acc1[3]/16.f + 16.f*acc2[3])) -

dmin * (sumy[0] * sc8[2] + sumy[1] * sc8[3] + sumy[2] * sc8[6] + sumy[3] * sc8[7]);

q1 += step;

@@ -1747,6 +1864,15 @@ kernel void kernel_mul_mat_q6_K_f32(

//============================= templates and their specializations =============================

+// NOTE: this is not dequantizing - we are simply fitting the template

+template

+void dequantize_f32(device const float4x4 * src, short il, thread type4x4 & reg) {

+ float4x4 temp = *(((device float4x4 *)src));

+ for (int i = 0; i < 16; i++){

+ reg[i/4][i%4] = temp[i/4][i%4];

+ }

+}

+

template

void dequantize_f16(device const half4x4 * src, short il, thread type4x4 & reg) {

half4x4 temp = *(((device half4x4 *)src));

@@ -1758,28 +1884,30 @@ void dequantize_f16(device const half4x4 * src, short il, thread type4x4 & reg)

template

void dequantize_q4_0(device const block_q4_0 *xb, short il, thread type4x4 & reg) {

device const uint16_t * qs = ((device const uint16_t *)xb + 1);

- const half d = il ? (xb->d / 16.h) : xb->d;

- const half m = il ? ( -8.h * 16.h) : -8.h;

+ const float d1 = il ? (xb->d / 16.h) : xb->d;

+ const float d2 = d1 / 256.f;

+ const float md = -8.h * xb->d;

const ushort mask0 = il ? 0x00F0 : 0x000F;

- const ushort mask1 = il ? 0xF000 : 0x0F00;

+ const ushort mask1 = mask0 << 8;

for (int i=0;i<8;i++) {

- reg[i/2][2*(i%2)] = (((qs[i] & mask0) ) + m) * d;

- reg[i/2][2*(i%2)+1] = (((qs[i] & mask1) >> 8) + m) * d;

+ reg[i/2][2*(i%2)+0] = d1 * (qs[i] & mask0) + md;

+ reg[i/2][2*(i%2)+1] = d2 * (qs[i] & mask1) + md;

}

}

template

void dequantize_q4_1(device const block_q4_1 *xb, short il, thread type4x4 & reg) {

device const uint16_t * qs = ((device const uint16_t *)xb + 2);

- const half d = il ? (xb->d / 16.h) : xb->d;

- const half m = xb->m;

+ const float d1 = il ? (xb->d / 16.h) : xb->d;

+ const float d2 = d1 / 256.f;

+ const float m = xb->m;

const ushort mask0 = il ? 0x00F0 : 0x000F;

- const ushort mask1 = il ? 0xF000 : 0x0F00;

+ const ushort mask1 = mask0 << 8;

for (int i=0;i<8;i++) {

- reg[i/2][2*(i%2)] = (((qs[i] & mask0) ) * d) + m;

- reg[i/2][2*(i%2)+1] = (((qs[i] & mask1) >> 8) * d) + m;

+ reg[i/2][2*(i%2)+0] = ((qs[i] & mask0) * d1) + m;

+ reg[i/2][2*(i%2)+1] = ((qs[i] & mask1) * d2) + m;

}

}

@@ -1815,7 +1943,7 @@ void dequantize_q2_K(device const block_q2_K *xb, short il, thread type4x4 & reg

template

void dequantize_q3_K(device const block_q3_K *xb, short il, thread type4x4 & reg) {

- const float d_all = (float)(xb->d);

+ const half d_all = xb->d;

device const uint8_t * q = (device const uint8_t *)xb->qs;

device const uint8_t * h = (device const uint8_t *)xb->hmask;

device const int8_t * scales = (device const int8_t *)xb->scales;

@@ -1828,16 +1956,18 @@ void dequantize_q3_K(device const block_q3_K *xb, short il, thread type4x4 & reg

((il/4)>0 ? 12 : 3);

uint16_t kmask2 = il/8 ? 0xF0 : 0x0F;

uint16_t scale_2 = scales[il%8], scale_1 = scales[8 + il%4];

- int16_t dl_int = (il/4)&1 ? (scale_2&kmask2) | ((scale_1&kmask1) << 2) : \

- (scale_2&kmask2) | ((scale_1&kmask1) << 4);